Biography

Dr. Zhang is a full-time research scientist at the Beijing Institute for General Artificial Intelligence (BIGAI). He received his Ph.D. degree (’19) from Beijing Institute of Technology and was a visiting Ph.D. student at the Center for Vision, Cognition, Learning, and Autonomy (VCLA) at UCLA. His research currently focuses on reinforcement learning, embodied artificial intelligence, autonomous robots, and symmetrical reality.

Open PhD Positions for Fall 2026 (updated April 27, 2025): I am seeking outstanding candidates for the Joint PhD Program with Beijing Institute of Technology, Beihang University, Beijing Normal University, Zhejiang University, and many other leading institutes. If you have a strong academic background and would like to join our team through this doctoral program, please feel free to contact me (zlzhang@bigai.ai)!

I apologize that I may not be able to reply to every message, but please trust that your excellence will ultimately make you stand out!

- Artificial General Intelligence

- Autonomous Robots

- Human-Computer Interaction

- Mixed/Virtual Reality

- Symmetrical Reality

- PhD in Optical Engineering, 2019, Beijing Institute of Technology

- Joint PhD in Statistics, 2019, University of California, Los Angeles

- BEng in Electronic Science & Technology, 2013, Beijing Institute of Technology

Recent Publications

Contact

Please feel free to contact me during office hours.

- zzlyw10@gmail.com

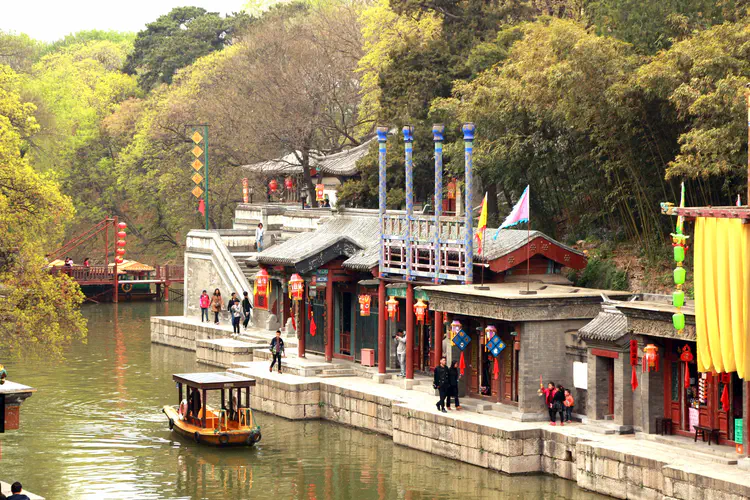

- 2 Summer Palace Road, Beijing 100080

- Office hours: weekdays 09:00 to 18:00; weekends 12:00 to 16:00

![[TOMM 2024] Demonstrative Learning for Human-Agent Knowledge Transfer](/publication/journal_tomm_demolearing/featured_hu4ef834a469ec20af1308e4799770a6e2_2370393_150x0_resize_lanczos_1.gif)

![[MM 2024] In Situ 3D Scene Synthesis for Ubiquitous Embodied Interfaces](/publication/conf_mm24_sceneagent/featured_huf1924411ae8d9827f250a8354ff40d82_3680065_150x0_resize_lanczos_1.gif)

![[ICLR 2024] Bongard-OpenWorld: Few-Shot Reasoning for Free-form Visual Concepts in the Real World](/publication/conf_iclr24_bongardow/featured_hu691f13b767efc7075ac4dbb20cbf2a7b_217853_31e65263a9b94119755ed880e5e440d1.webp)

![[VR 2024] On the Emergence of Symmetrical Reality](/publication/conf_vr24_sr/featured_hu4c0a6800d0a3e47f4110cd046f8a2fdf_531344_8f5d26de6f8fd3b309a39310e3a43954.webp)

![[TOG/SiggraphAsia 2023] Commonsense Knowledge-Driven Joint Reasoning Approach for Object Retrieval in Virtual Reality](/publication/journal_tog_object_retrieval/featured_hua8e94fb835dfcf00774f2d3fb8b1e34d_2809041_2092a48d856670b610dd46f1298898ec.webp)

![[UIST 2023] Neighbor-Environment Observer: An Intelligent Agent for Immersive Working Companionship](/publication/conf_uist23_neo/featured_hub6bc94d56f1d41b58d9e2ccd62b93e6d_546244_8084b8e886e4731283228ebab78f3384.webp)

![[Engineering 2023] The tong test: Evaluating artificial general intelligence through dynamic embodied physical and social interactions](/publication/journal_engineering_tongtest/featured_hu9dadddc4766677badb59d1e9a3976b01_120795_d64e92635c4fd02dbed031474b10e597.webp)

![[Virtual Reality 2023] DexHand: dexterous hand manipulation motion synthesis for virtual reality](/publication/journal_virtualreality_dexhand/featured_hu476a5a8f8ace4a4e55ff77ca24ad8c28_207706_0e1c24ce2366a306b517c71f4b29ecf8.webp)

![[VR 2023] Building symmetrical reality systems for cooperative manipulation](/publication/conf_vr23_building_sr_system/featured_hu31500a0fb81e8a1de2e8578eca610fd6_311883_d3ba8424fde8488f4a8ae56da23f77c1.webp)

![[Engineering 2023] A reconfigurable data glove for reconstructing physical and virtual grasps](/publication/journal_engineering_grasp/featured_hu77153321ae4daccaac199ad6fbfd6536_206746_d30ee2f762f83c1007978fbc148c9374.webp)