[VR 2020] Extracting and transferring hierarchical knowledge to robots using virtual reality

Abstract

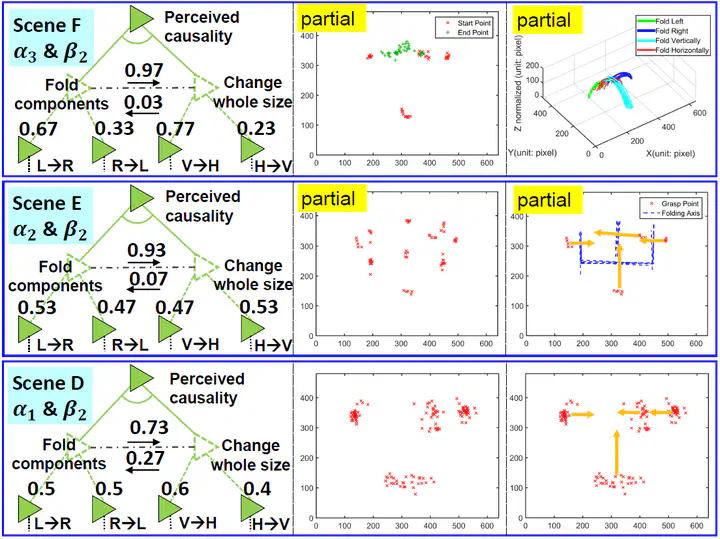

We study the knowledge transfer problem by training a clothes-folding task in a virtual world with an Oculus headset and validating it on a physical Baxter robot. We argue that such complex transfer is realizable if an abstract graph-based knowledge representation is adopted to facilitate the process. We introduce an And-Or-Graph (AOG) grammar model to represent the knowledge, which can be learned from human demonstrations performed in Virtual Reality (VR), followed by a case analysis of folding clothes as represented and learned by the AOG grammar model.
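To make the And-Or-Graph idea concrete, here is a minimal Python sketch of such a grammar. It is an illustration under stated assumptions, not the paper's implementation: the class names, the folding actions, and the branch weights are all hypothetical. An And-node is read as "perform every child in order", an Or-node as "pick one alternative", and a terminal as an atomic action a robot could execute.

```python
import random
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Terminal:
    """Atomic action (hypothetical names; not taken from the paper)."""
    name: str


@dataclass
class AndNode:
    """Decomposition: every child is performed, in order."""
    name: str
    children: List["Node"]


@dataclass
class OrNode:
    """Alternatives: exactly one child is chosen, with the given weights."""
    name: str
    children: List["Node"]
    weights: List[float]


Node = Union[Terminal, AndNode, OrNode]


def all_plans(node: Node) -> List[List[str]]:
    """Enumerate every action sequence the grammar can generate."""
    if isinstance(node, Terminal):
        return [[node.name]]
    if isinstance(node, OrNode):
        return [plan for child in node.children for plan in all_plans(child)]
    # AndNode: concatenate one plan from each child, preserving child order.
    plans: List[List[str]] = [[]]
    for child in node.children:
        plans = [prefix + suffix for prefix in plans for suffix in all_plans(child)]
    return plans


def sample_plan(node: Node, rng: random.Random) -> List[str]:
    """Draw one action sequence, resolving each Or-node by its weights."""
    if isinstance(node, Terminal):
        return [node.name]
    if isinstance(node, AndNode):
        return [step for child in node.children for step in sample_plan(child, rng)]
    choice = rng.choices(node.children, weights=node.weights, k=1)[0]
    return sample_plan(choice, rng)


# Hypothetical grammar: sleeves may be folded in either order, then the hem.
left = Terminal("fold_left_sleeve")
right = Terminal("fold_right_sleeve")
sleeves = OrNode(
    "fold_sleeves",
    children=[
        AndNode("left_then_right", [left, right]),
        AndNode("right_then_left", [right, left]),
    ],
    weights=[0.7, 0.3],  # assumed values, e.g. demonstration frequencies
)
fold_shirt = AndNode("fold_shirt", [sleeves, Terminal("fold_bottom_up")])

if __name__ == "__main__":
    for plan in all_plans(fold_shirt):
        print(plan)
    print(sample_plan(fold_shirt, random.Random(0)))
```

In a sketch like this, learning from demonstrations could amount to estimating the Or-node weights from how often each alternative appears in the recorded VR sessions; how the paper actually learns the grammar is not specified in this abstract.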

Publication

In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW)
