[IROS 2019] Toward an efficient hybrid interaction paradigm for object manipulation in optical see-through mixed reality

Abstract

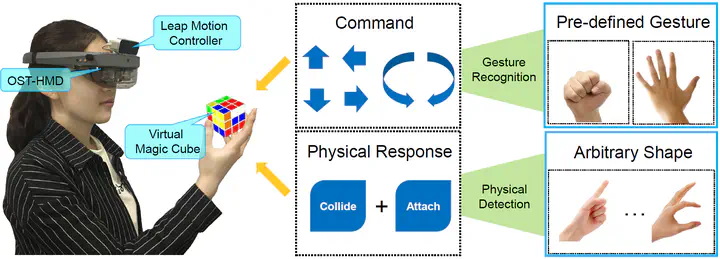

Human-computer interaction (HCI) plays an important role in near-field mixed reality, where hand-based interaction is one of the most widely used interaction modes, especially in applications based on optical see-through head-mounted displays (OST-HMDs). In this paper, two such modes, gesture-based interaction (GBI) and physics-based interaction (PBI), are implemented in a mixed reality system in order to evaluate their respective advantages and disadvantages. The ultimate goal is to find an efficient hybrid paradigm for OST-HMD-based mixed reality applications that can handle situations a single interaction mode cannot. An experiment comparing GBI and PBI shows that users work more efficiently with PBI in the two proposed tasks. Statistical tests, including the t-test and one-way ANOVA, show that the difference in efficiency between the interaction modes is significant. Further experiments combine both interaction modes in search of a good manipulation experience; their results suggest that the partially-overlapping style helps improve work efficiency in manipulation tasks. The experimental results for the two hand-based interaction modes and their hybrid forms provide practical suggestions for the development of mixed reality systems based on OST-HMDs.
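To make the statistical comparison concrete, the following is a minimal sketch of how a two-sample t statistic and a one-way ANOVA F statistic could be computed for task completion times under two interaction modes. It is not the authors' analysis code: the function names `pooled_t` and `one_way_anova_f` are hypothetical, the pooled-variance (Student) form of the t-test is an assumption since the abstract does not name the variant, and the timing values are invented purely for illustration. The p-value lookup is omitted.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of observations around their group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


def pooled_t(a, b):
    """Return Student's two-sample t statistic using a pooled variance."""
    na, nb = len(a), len(b)
    ss = sum((x - mean(a)) ** 2 for x in a) + sum((x - mean(b)) ** 2 for x in b)
    pooled_var = ss / (na + nb - 2)
    return (mean(a) - mean(b)) / (pooled_var * (1 / na + 1 / nb)) ** 0.5


# Hypothetical completion times in seconds; NOT data from the paper.
gbi_times = [41.0, 38.5, 45.2, 40.1, 43.3]
pbi_times = [33.2, 35.0, 31.8, 36.4, 34.1]

t_stat = pooled_t(gbi_times, pbi_times)
f_stat = one_way_anova_f([gbi_times, pbi_times])
```

With exactly two groups, the ANOVA F statistic equals the square of the pooled t statistic, so the two tests agree in that case; ANOVA becomes the more useful tool once more than two conditions (for example, several hybrid styles) are compared at once.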